Revolutionizing Operations: The Future of Industrial Data Platforms

Bridging IT and OT with an Operational Data Platform

In today’s industrial landscape, digital transformation is essential for enhancing efficiency and innovation, with data playing a pivotal role. Operational data platforms emerge as crucial solutions, bridging the gap between IT and OT to enable seamless data convergence and utilization. They are tailor-made for sensor/IIoT data and recognize the importance of local reliability and global scalability. These platforms break down traditional silos, fostering a unified data ecosystem that supports real-time decision-making and continuous improvement. This guide explores the transformative power of operational data platforms, addressing the challenges of scaling digital initiatives, sharing expert insights, and providing real-world examples of successful IT/OT convergence, emphasizing their strategic importance for achieving long-term success in the digital age.

Chapter 1 — Answering the WHY question

It’s the Platform, Stupid!

Why is predictive maintenance so hard? Because maintenance technicians don’t want to spend hours digging through Excel sheets. When the buzzword was “Augmented Reality”, we were not looking for new ways to draw something in 3D (the gaming industry is way more advanced than the stuff we were building). No, we needed to integrate several data sources into one application and that’s exactly what was holding us back. Same now for Artificial Intelligence: the algorithms are not the problem, the data is.

So what is the problem if we cannot scale?

Every data project becomes a massive undertaking because you need to untangle the data spaghetti over and over again!

To make matters worse, on average 70% of these projects will fail or be stopped (hopefully early enough), and for the 30% that succeed, learnings and results will typically not be shared outside of the project scope. Finally, extracting, transforming and loading data into whatever system you are using requires very specific data engineering skills, which makes the data engineers a bottleneck for every report, trend or calculation being requested.

But…

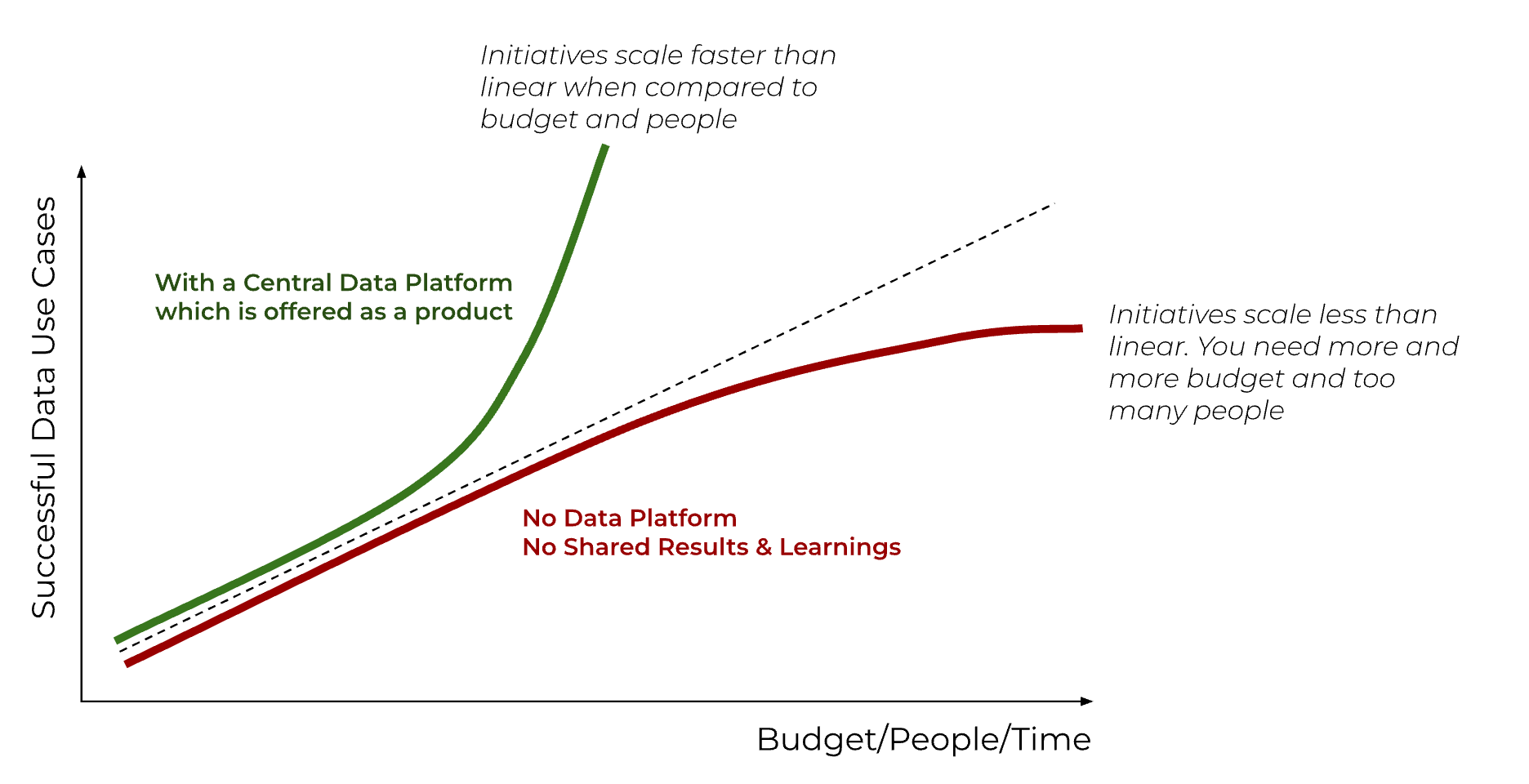

What if we had a Data Platform integrating all OT/IIoT and maybe even IT sources with their context?

One that allows us to scale the outcome of our data initiatives better than linearly, or even exponentially?

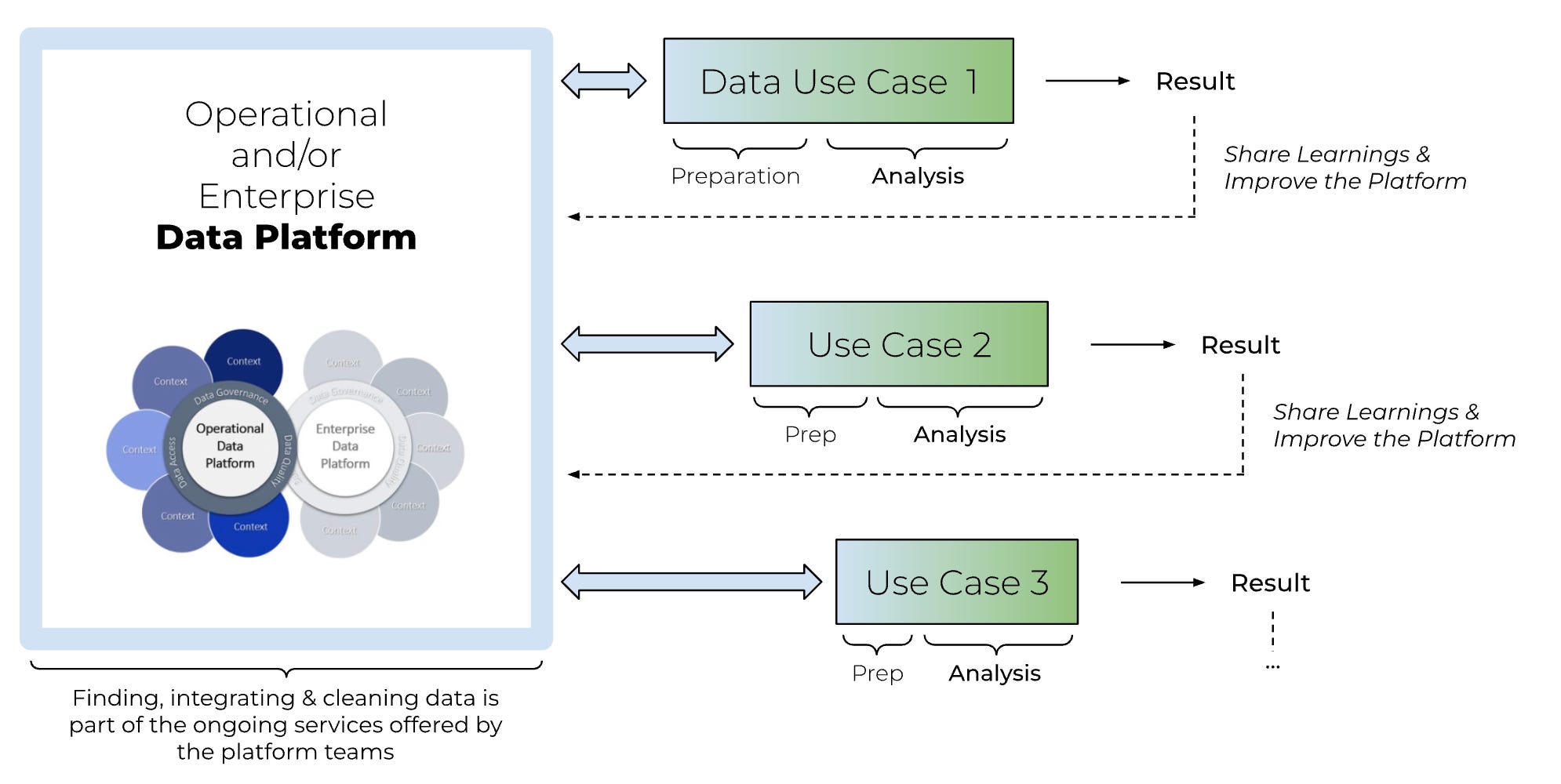

Instead of reinventing the wheel for every data use case, we should first invest in an Operational Data Platform.

This data platform is managed by a platform team. Their task is to develop and maintain the platform, ensuring it meets the business’s needs. This is a product with a service model. The team operates autonomously, but they must service their users. They have full ownership of building, running, and maintaining the platform. The internal services they offer simplify life for the rest of the organization.

The Platform Team needs to ensure that the platform provides good quality data in context, and easy access for all types of business users (management, data scientists, reliability engineers, third parties…). They lead the governance activities but are never the data owners—that responsibility lies with the business.

Chapter 2 — The IT and OT view on data

Data Wars: The Lake Strikes Back

On The IT/OT Insider, our mission is to bridge the gap between IT and OT, fostering a unified understanding that drives innovation and efficiency. In this chapter, we embark on a journey to demystify IT-related data concepts for our OT colleagues and vice versa.

Let’s start by introducing two fundamental concepts from the IT world: Data Lakes and Data Warehouses.

The IT view on Data Lakes and Data Warehouses

Credit where it’s due: the concept of a Data Lake and a Data Warehouse comes from our friends in IT, who have long been grappling with the challenges of storing and managing vast amounts of data. These concepts are pivotal in the IT domain, but understanding them is equally crucial for OT professionals aiming to leverage data for operational excellence.

Data Lake

- A repository for storing unstructured data,
- Stores raw data,
- Because there is no chosen structure yet and the data is still raw, a data lake supports more advanced analytics use cases (you often want to have the raw, unprocessed data).

Data Warehouse

- Stores structured data from different sources,
- Stores processed data (for example: aggregations),
- Clearly defined data schema per use case,
- Because the Data Warehouse typically holds processed (not raw) data, you might lose some fidelity/granularity (for example: only a sum or count of certain records, not the individual ones), making it less suitable for (advanced) data science projects.
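
The loss of granularity described above can be made concrete with a small sketch. The batch IDs, weights and the function name `aggregate_per_batch` are made up for illustration; they do not come from any specific lake or warehouse product.

```python
# Hypothetical raw records as they might sit in a data lake: (batch_id, weight_kg).
raw_records = [
    ("B001", 10.2),
    ("B001", 9.8),
    ("B001", 55.0),  # an individual anomaly, only visible in the raw data
    ("B002", 10.1),
    ("B002", 10.0),
]


def aggregate_per_batch(records):
    """Build a warehouse-style summary: one row per batch with a count and a total."""
    summary = {}
    for batch_id, weight in records:
        row = summary.setdefault(batch_id, {"count": 0, "total_kg": 0.0})
        row["count"] += 1
        row["total_kg"] += weight
    return summary


warehouse_table = aggregate_per_batch(raw_records)

# The summary answers "how much did each batch weigh in total?" ...
print(warehouse_table)
# ... but only the raw records can still tell us which single record was odd.
print(max(raw_records, key=lambda record: record[1]))
```

A report builder is well served by `warehouse_table`. A data scientist looking for the cause of the 55.0 kg record needs `raw_records`.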

The OT view on Data

Your control system is designed to capture and store sensor (time-series) data generated by industrial processes and equipment. It collects data points at different intervals from various sensors, devices and machinery. That includes measurements like temperature, pressure, flow, voltage and more.

These data points are timestamped and stored, creating a historical record of how the processes have evolved over time. Some values might be stored every second, others every minute, hour or day, or at completely irregular intervals (this irregularity makes it impractical to store these values in a traditional relational database at scale).

In most cases the Time Series data is stored in a Process Historian on Level 2 or 3 of the Purdue Model. This piece of software is designed to store and access time series data in a very efficient manner.

At this point, our time series data is still raw and unstructured.

Production context – such as start/end batch or product made – might be available to some extent in a Manufacturing Operations Management (MOM/MES) system, but is notoriously difficult to combine with time series data.

Furthermore, as we would like to encourage everybody in our organization to start working with data (often referred to as “Citizen Data Science”), we now come to the conclusion that only a small part of those Citizens understand that L15.B1.T01A.PV refers to a temperature sensor in the baking oven. In most cases the Asset Context is missing as well.
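
To illustrate what adding Asset Context to a tag could look like, here is a minimal sketch. Only the tag name and the fact that it is a temperature sensor in the baking oven come from the text above. The "Line 15" label, the unit, and the names `AssetContext`, `TAG_CONTEXT` and `describe` are assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AssetContext:
    line: str
    asset: str
    measurement: str
    unit: str


# Hypothetical lookup table. In practice this information would come from an
# engineering or master data system, not from a hard-coded dictionary.
TAG_CONTEXT = {
    "L15.B1.T01A.PV": AssetContext(
        line="Line 15",        # assumed meaning of the "L15" prefix
        asset="Baking oven",
        measurement="Temperature",
        unit="°C",             # assumed unit
    ),
}


def describe(tag: str) -> str:
    """Translate a raw tag name into something a non-expert can read."""
    ctx = TAG_CONTEXT.get(tag)
    if ctx is None:
        return f"{tag} (no asset context available)"
    return f"{ctx.measurement} of {ctx.asset} on {ctx.line} [{ctx.unit}]"


print(describe("L15.B1.T01A.PV"))
print(describe("L15.B1.F02.PV"))
```

The second call shows the situation most Citizen Data Scientists are in today: a tag name with nothing to explain it.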

Chapter 3 — Towards a platform

The Operational Data Platform:

What the future of OT Data will look like

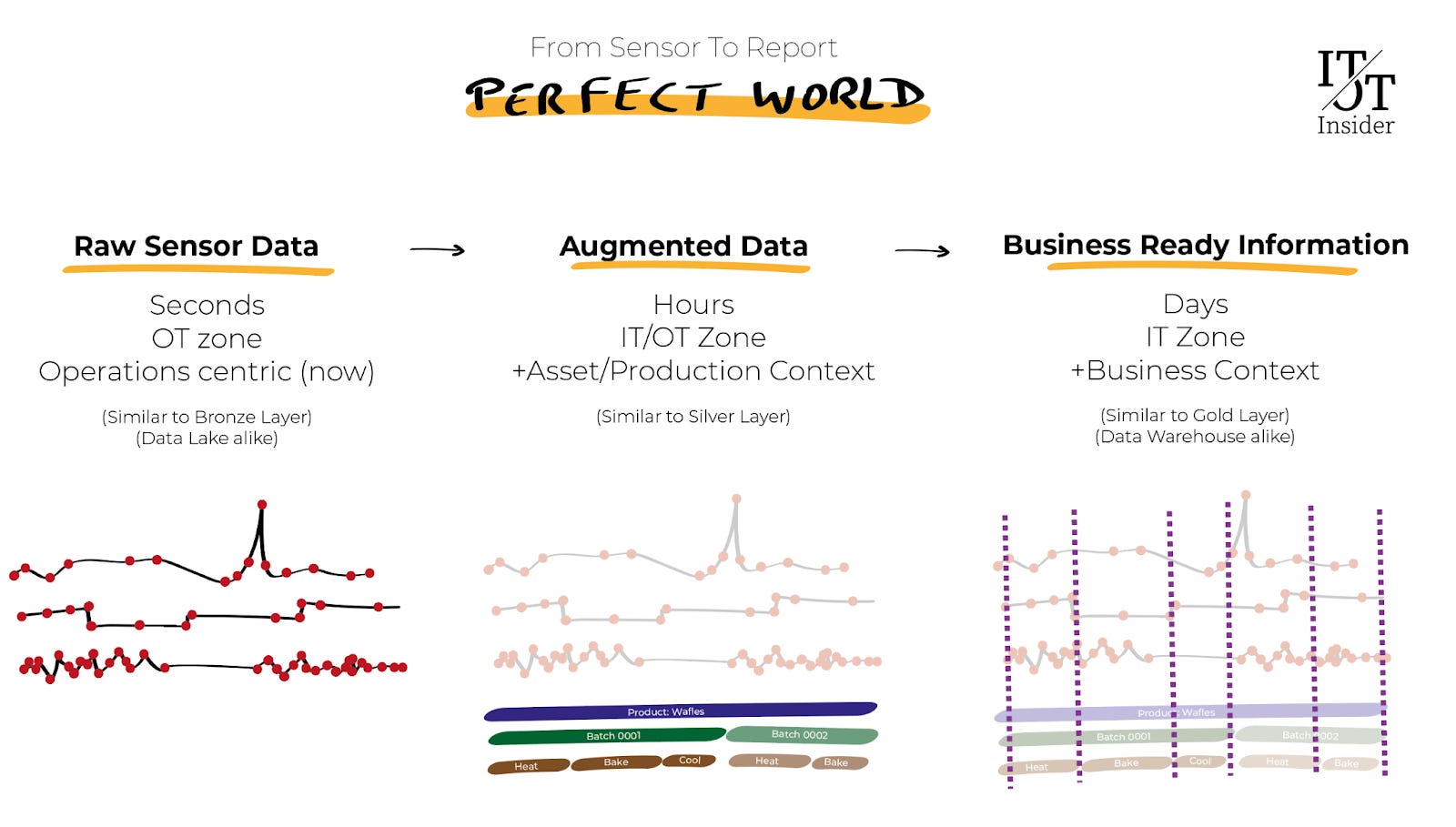

Similar to a Data Warehouse, we need to introduce the power of context into our OT environment. It will help us to make sense of time series data. In the above infographic, we can now easily see which product we were producing, in which batches and also in which stage.
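
As a rough sketch of how production context can be attached to time series data, the example below looks up the batch that was running at the moment of each reading. All batch records, readings and names (`batches`, `batch_for`) are hypothetical, and timestamps are plain seconds to keep the example short.

```python
from bisect import bisect_right

# Hypothetical batch records as an MES might expose them:
# (start_s, end_s, batch_id, product), sorted by start time, end is exclusive.
batches = [
    (0, 600, "B001", "Product A"),
    (600, 1500, "B002", "Product B"),
]
batch_starts = [batch[0] for batch in batches]


def batch_for(timestamp):
    """Return (batch_id, product) for the batch running at `timestamp`, or None."""
    index = bisect_right(batch_starts, timestamp) - 1
    if index < 0:
        return None
    _start, end, batch_id, product = batches[index]
    return (batch_id, product) if timestamp < end else None


# Hypothetical sensor readings: (timestamp_s, value).
readings = [(30, 181.5), (610, 176.0), (1600, 25.0)]

# Each reading now carries the production context it belongs to.
contextualized = [(t, value, batch_for(t)) for t, value in readings]
for row in contextualized:
    print(row)
```

The last reading falls outside any batch, so its context is `None`. That is useful information in itself: the equipment was not producing at that moment.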

Types of Contexts in a Manufacturing environment

Asset Context gives us insight into the physical assets in our plant. It is a rudimentary Digital Twin. This data can often be found in Engineering or Master Data systems.

Production Context allows us to link the data to the actual manufacturing process which took place. This data can often be found in a Manufacturing Execution System (MES). It helps us to identify the product which was made, the order/batch it belongs to, the materials used, the operator/shift/team who worked on it and much more.

Maintenance Context can give direct insights into the OEE of our equipment. Understanding how planned and unplanned maintenance relate to certain process conditions can again be the starting point of a data exploration project. We do need to note that many technicians still use pen and paper today. Accurate data, correct timestamps, linked assets and the elimination of free text are essential prerequisites.

Other dimensions are possible too!

We might have a Financial Context (e.g. direct input on the prices of energy, raw materials and so on), a Quality Context (e.g. the correlation between product quality and process parameters) and many others.

Crystal ball:

What the future of an Operational Data Platform might look like

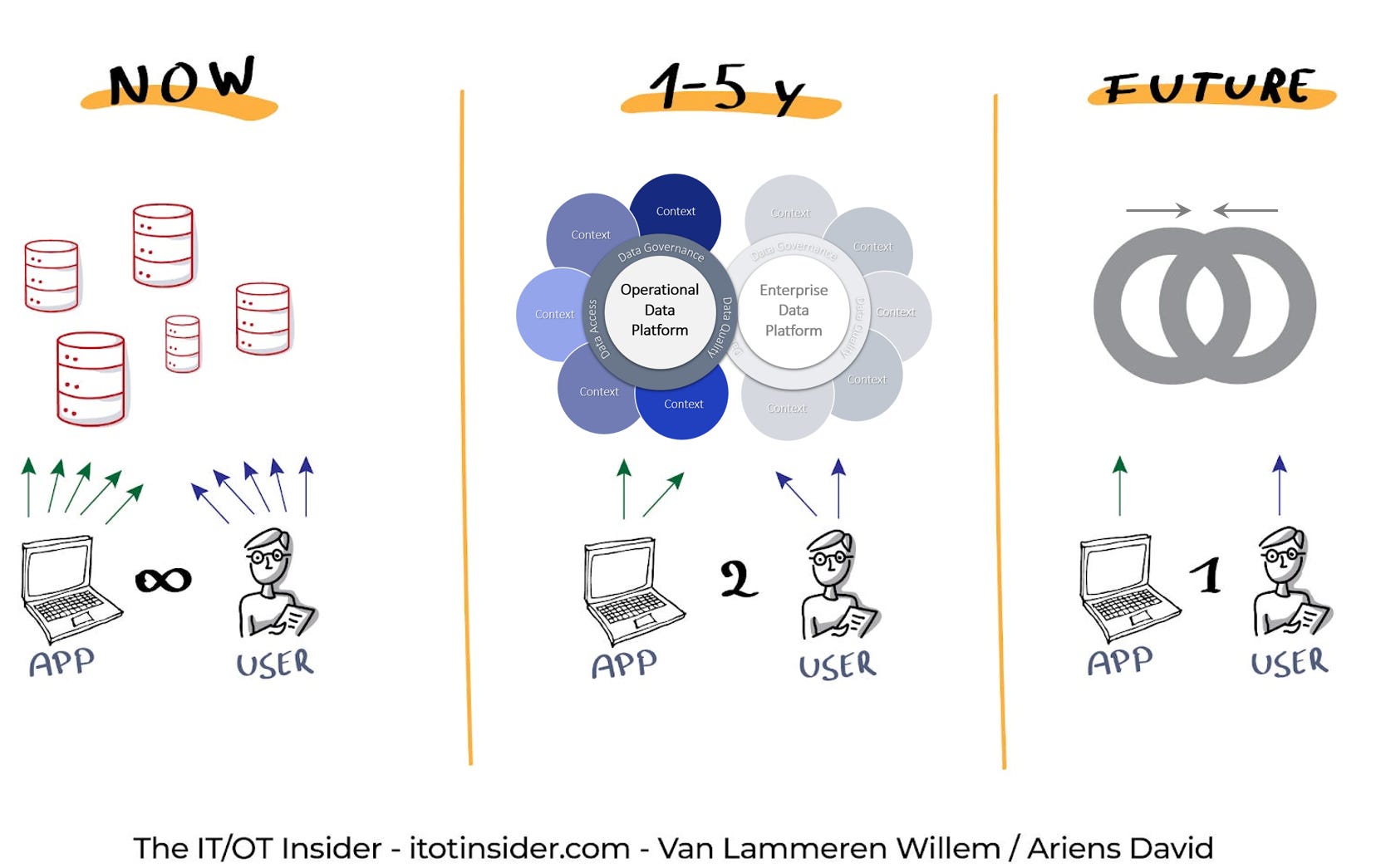

Now

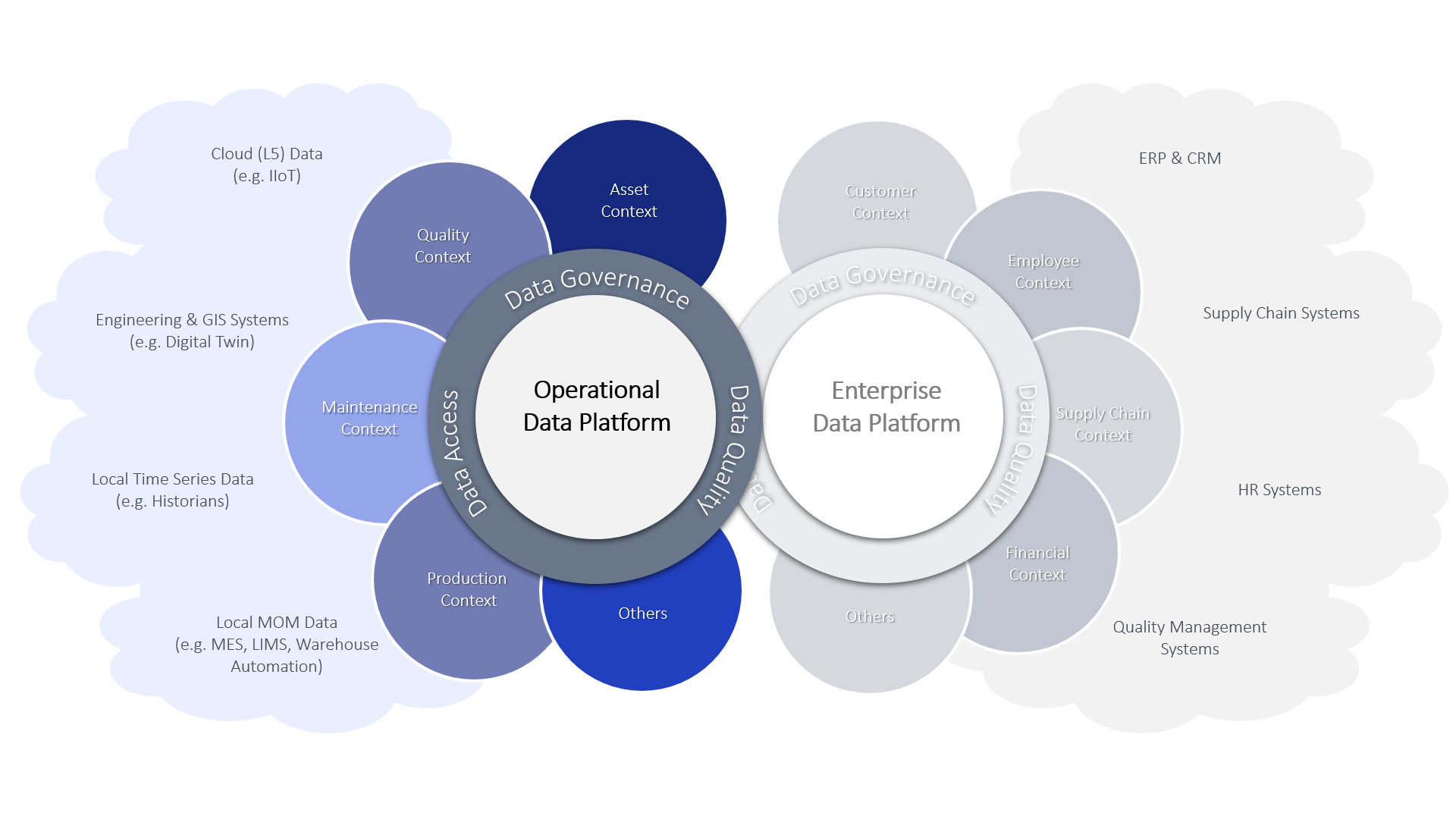

Most systems holding data are still on an island somewhere. Furthermore, OT systems are typically on-premise (local) systems whereas IT systems are almost always cloud-based. Many companies are trying to introduce more powerful OT systems/historians capable of creating part of this context. However, we don’t see real “platforms” yet.

When companies use their existing Data Lake for OT use cases, we typically see that only a small part of the data gets duplicated (e.g. for just a handful of use cases). As many existing IT systems are not capable of dealing with time series data, interpolating data is often necessary (the purple dotted line in the first infographic). This implies that you already have made several assumptions on how the data will be used later on.

What is even more troublesome is that current IT systems cannot deal with typical OT-style contextual information such as Asset Context or metadata (such as limits or units), and definitely not with the more advanced contexts introduced in the previous section.
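
To see why interpolating OT data means making assumptions up front, consider the sketch below. It estimates the same missing value in two common ways. The sample data and both function names are hypothetical and only serve as an illustration.

```python
def linear_interpolate(samples, t):
    """Estimate the value at time t on a straight line between its neighbours."""
    for (t0, v0), (t1, v1) in zip(samples, samples[1:]):
        if t0 <= t <= t1:
            return v0 + (v1 - v0) * (t - t0) / (t1 - t0)
    raise ValueError("t lies outside the sampled range")


def last_value_hold(samples, t):
    """Estimate the value at time t by repeating the most recent stored sample."""
    previous = [value for timestamp, value in samples if timestamp <= t]
    if not previous:
        raise ValueError("no sample at or before t")
    return previous[-1]


# Hypothetical, irregularly stored samples (seconds, value), sorted by time.
# Think of a valve position that is only stored when it changes.
samples = [(0, 0.0), (10, 0.0), (70, 100.0)]

print(linear_interpolate(samples, 40))  # 50.0: assumes the valve opened gradually
print(last_value_hold(samples, 40))     # 0.0: assumes it stayed closed until t=70
```

Both answers are defensible, but they tell a different story about the process. Whoever picks one method while copying data into the lake has already decided how the data may be used later on.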

Mid Term (1-5 years)

The Operational Data Platform has to be Cloud native (private and public). Not because there is no future for on-premise software, but because it is the only way to exponentially scale future use cases (easily share reports across several locations, use open-source tooling for data science, share data with third parties, etc.).

Several vendors today offer solutions that move in the direction of an “Operational Data Platform”. Those platforms are still at an early stage. They typically focus on a limited number of use cases, and the ability to introduce context is often missing or only available in a limited way. However, we expect that context will make or break future solutions.

Integration between IT and OT platforms (e.g. access ERP data) will be key in getting the platform accepted by all communities. However, fully integrating the IT infrastructure with OT data sources is still a step too far. Providing the choice between public and private cloud will be a critical acceptance factor for the more security-sensitive industries.

Future (+5 years)

IT and OT technologies and cultures will merge. We don’t know when, but at a certain point in time the differences between IT and OT will really go away. Still sci-fi today, but who knows what the future will bring 😉

Chapter 4 — Towards a platform

Why Data Quality is Essential

It’s now time to focus on the role of Data Quality in your Operational/Industrial Data Platform. When is data ‘good’?

Why does that matter for IT and OT? Why is it even more important for users consuming your data products?

The Perfect World: From Data to Business-Ready-Info

Here is how we rely on data today:

- Sensors (either traditional or IIoT) capture data which gets stored in a historian somewhere.

For those in Stage 1 of the Data Maturity Model: this is all you have available as users directly access your historian. Those in Stage 2 or further can take additional steps.

- Sensor data gets augmented in the IT/OT zone, for example by MES systems including Asset and/or Process Context (‘what was I producing’, ‘where’ and ‘when’).

- Finally, enhanced and transformed data is made available as business ready information to the end user.

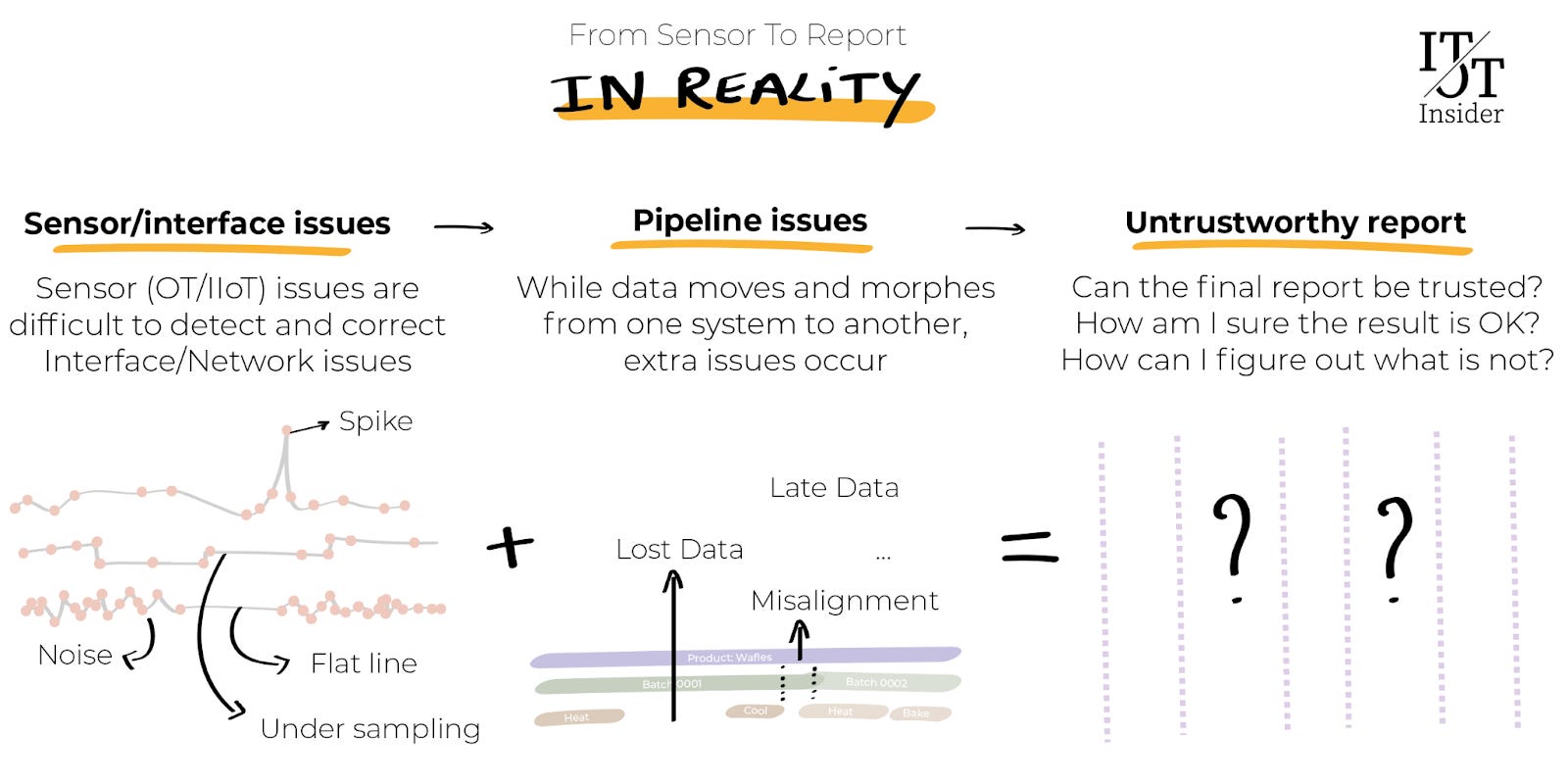

Sounds pretty straightforward, but lots of things can (and do) go wrong.

Data good enough for Operations isn’t per se good enough for Analytics!

Data issues, somewhere in the pipeline from sensor to final report, are difficult to detect and correct.

You might just sum up data containing an outlier, resulting in totally wrong conclusions (1000000 instead of 1000). Or a sensor might have been giving a flatline reading for days, weeks or even months without your knowledge.
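
Both problems can be caught with fairly simple checks before data ends up in a report. The sketch below is one possible approach, not a complete data quality solution. The function names, the threshold `factor=10.0` and the sample values are assumptions chosen for the example.

```python
from statistics import median


def find_outliers(values, factor=10.0):
    """Return the indices of values larger than `factor` times the median magnitude."""
    typical = median([abs(v) for v in values])
    if typical == 0:
        return []
    return [i for i, v in enumerate(values) if abs(v) > factor * typical]


def longest_flatline(values):
    """Return the length of the longest run of identical consecutive values."""
    if not values:
        return 0
    longest = current = 1
    for previous, value in zip(values, values[1:]):
        current = current + 1 if value == previous else 1
        longest = max(longest, current)
    return longest


# Hypothetical daily totals with one record that is off by a factor of 1000.
daily_totals = [1000, 980, 1020, 1000000, 995]
suspect = find_outliers(daily_totals)
clean_sum = sum(v for i, v in enumerate(daily_totals) if i not in suspect)
print(suspect, sum(daily_totals), clean_sum)

# Hypothetical temperature readings from a sensor that got stuck.
temperatures = [21.5, 21.5, 21.5, 21.5, 22.0]
print(longest_flatline(temperatures))
```

What counts as an outlier or as a suspicious flatline depends on the process, so the thresholds should be agreed with the people who own the data.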

When we run automatic reports or use data as part of (advanced) mathematical models (think: AI, Digital Twins…), we want to make sure we can rely on the outcome.

The realization is growing that data is a strategic asset and it is about time we start treating it that way.

Chapter 5 — Towards a manageable and scalable platform

The Unified Namespace (UNS) demystified

Some concepts gain more traction than others, and Unified Namespace (UNS) is one that stands out. In this part we explore the Unified Namespace as a possible design pattern (or even an ‘aspirational state’) when building an Industrial/Operational Data Platform.

A Unified Namespace is

(1) an organized and centralized data broker,

(2) depicting data in context from your entire business

(3) as it is right now.
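
The three elements of this definition can be sketched in a few lines of code. The example is a toy, in-memory stand-in and not a real broker. The class name `UnifiedNamespace`, its methods and the topic hierarchy (`acme/plant1/...`) are all hypothetical and chosen for illustration only.

```python
class UnifiedNamespace:
    """Toy stand-in for a central broker that keeps the latest value per topic."""

    def __init__(self):
        self._latest = {}

    def publish(self, topic, value):
        # (3) Only the current state is kept: a new value overwrites the old one.
        self._latest[topic] = value

    def read(self, topic):
        return self._latest[topic]

    def browse(self, prefix):
        # (2) The topic path itself carries the context (site, area, asset, signal).
        prefix = prefix.rstrip("/") + "/"
        return {t: v for t, v in self._latest.items() if t.startswith(prefix)}


# (1) One organized, central place that every system publishes to.
uns = UnifiedNamespace()
uns.publish("acme/plant1/bakery/oven1/temperature", 181.5)
uns.publish("acme/plant1/bakery/oven1/current_batch", "B002")
uns.publish("acme/plant1/bakery/oven1/temperature", 183.0)

print(uns.read("acme/plant1/bakery/oven1/temperature"))
print(uns.browse("acme/plant1/bakery/oven1"))
```

Note that this sketch keeps no history: asking what the temperature was an hour ago is a question for a historian or the wider data platform, not for the namespace itself.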